Tamas E. Doszkocs

National Library of Medicine

Bethesda, Maryland, USA

Keywords: Artificial Neural Networks, Connectionist Models, Parallel Distributed Processing, Intelligent Information Retrieval, Associative Processing, Machine Learning, Library and Information Technologies.

Historically, even the most imaginative library automation efforts have suffered from the fundamental limitation of traditional information processing approaches, namely their inability to manifest human-like behavior, such as learning, intelligence, adaptation and self-improvement over time in response to changes in and interaction with their environment.

From the earliest days of computing, many researchers, from computer scientists and neurophysiologists to librarians and philosophers, have been interested in creating computers that mimic human behavior and excellence in handling perceptual and cognitive tasks that are easily accomplished by people, including young children, yet are handled clumsily, if at all, by conventional computing techniques. Familiar examples include recognizing faces, learning one's native tongue or a foreign language, and recalling complete information from fragmentary clues. Such basic capabilities have been the goal of artificial neural networks, also known as "connectionist models" and "parallel distributed processing."

In a 1990 survey, Doszkocs, Reggia, & Lin (1990) described emerging R & D applications of neural network technology in library settings.

2. Artificial Neural Networks

Artificial neural networks represent a general computational methodology with a radically different approach to dealing with information. These types of systems accomplish processing through their information structure rather than through explicit computer programming and manipulation of data. In a sense, von Neumann processing is procedural, while neural network processing is intuitive, much like the difference between logical thinking and the coordination of complex bodily movements, such as in humming a favorite tune while preparing Hungarian goulash.

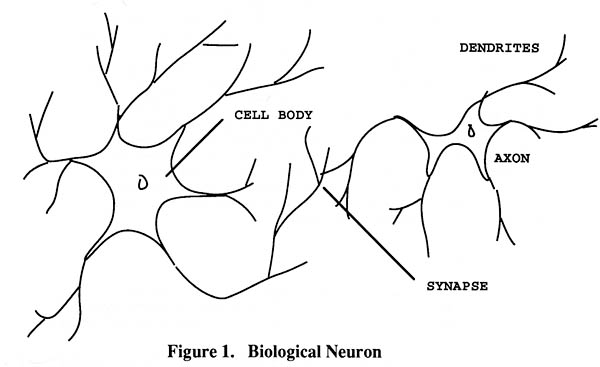

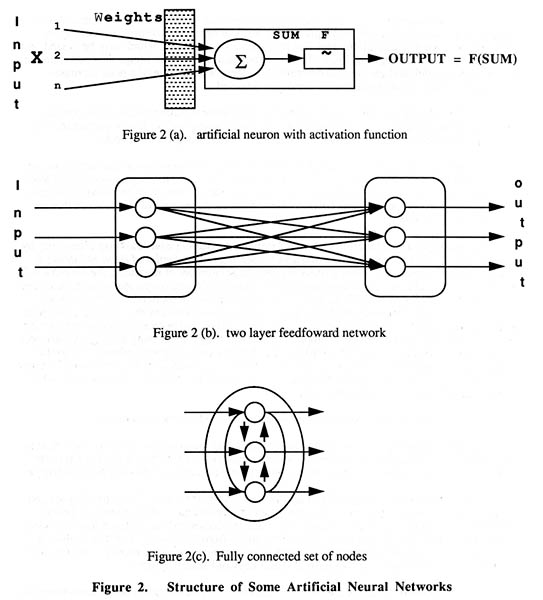

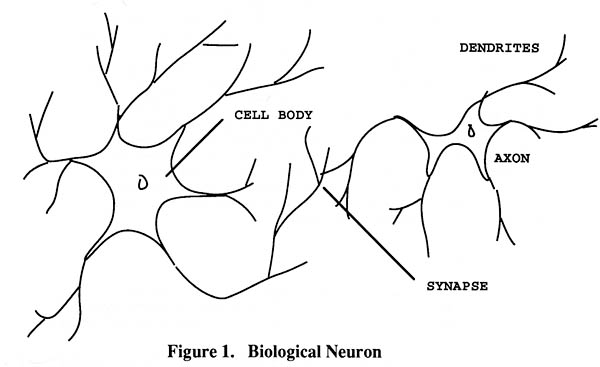

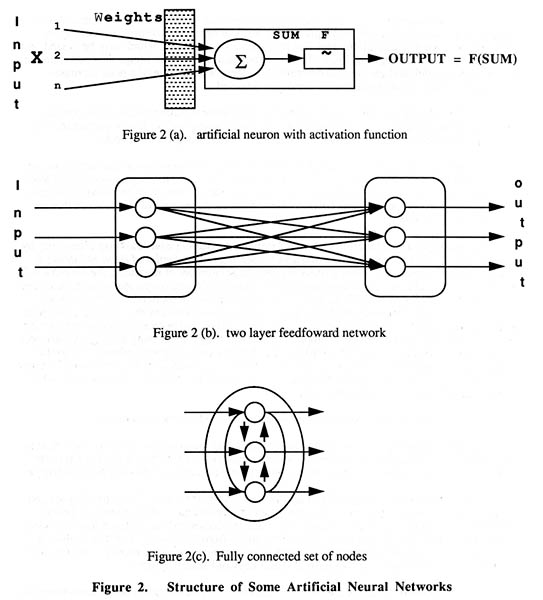

As shown in Figures 1 and 2, an artificial neural network superficially resembles a grossly simplified model of the network of biological neurons in the human brain (Wasserman, 1989). The three main components of a typical connectionist model are:

1. The network, a set of simple processing elements (nodes) connected together via directed (and weighted) links. Each processing element receives and sums a set of inputs, resulting in a specific activation level, and produces a single output, which may be passed on to neighboring nodes through the links. The links may denote different things depending on the intent of the model, and the weights determine whether the activation of a node increases, decreases or remains unchanged after inputs are received.

2. The activation rule, a local action that each node simultaneously carries out in updating its activation level following input from neighboring nodes. It is important to note that massive parallelism is involved as activation spreads through the network.

3. The learning rule (or adaptation rule), which governs any change that occurs in the behavior of the model over time. A learning rule describes how the weight on each link should be altered depending on its current value and the activations of the nodes that the link connects. Typically, connections are strengthened between elements of the network that are simultaneously active.
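The three components above can be illustrated with a minimal sketch. It is not taken from any system cited here: the three-node network, the logistic squashing function, the learning rate and the names `update_activations` and `hebbian_step` are all assumptions chosen for illustration, and the learning rule shown is the simplest Hebbian form of "strengthen links between simultaneously active nodes."

```python
import math

# Hypothetical network: weights[(src, dst)] is the weight on the directed link src -> dst.
weights = {("a", "c"): 0.5, ("b", "c"): -0.3}
activation = {"a": 1.0, "b": 1.0, "c": 0.0}


def logistic(x):
    """Squash a summed input into an activation level between 0 and 1 (an assumed choice)."""
    return 1.0 / (1.0 + math.exp(-x))


def update_activations(activation, weights, input_nodes):
    """Activation rule: each non-input node sums its weighted inputs.

    All nodes are updated from the same old state, which mimics the
    simultaneous (parallel) update described in the text.
    """
    new_activation = dict(activation)
    for node in activation:
        if node in input_nodes:
            continue  # input nodes are clamped to the external input
        net = sum(w * activation[src]
                  for (src, dst), w in weights.items() if dst == node)
        new_activation[node] = logistic(net)
    return new_activation


def hebbian_step(activation, weights, rate=0.1):
    """Learning rule: raise each weight in proportion to the joint activity of its two nodes."""
    return {(src, dst): w + rate * activation[src] * activation[dst]
            for (src, dst), w in weights.items()}


activation = update_activations(activation, weights, input_nodes={"a", "b"})
weights = hebbian_step(activation, weights)
print(activation["c"], weights)
```

A positive weight pushes the receiving node's activation up and a negative weight pushes it down, which is the sense in which the weights determine whether activation increases or decreases.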

Learning is a crucial ability of neural networks. Supervised learning ("teaching by doing") implies that the network is trained by presenting to it examples of input and desired output from a training set. After a (possibly very large) number of iterations, the network will be trained and able to accept new input and produce the desired (expected) output. An example is NETtalk (Sejnowski & Rosenberg, 1987), a connectionist model that learns to read printed text aloud. Unsupervised learning ("self teaching") means that the neural network can automatically detect regularities in input patterns and is able to group patterns with similar structure into the same category. Computer vision and image processing applications are typical examples. Reinforcement learning ("teaching by critiquing or feedback") is useful when the full set of desired outputs is unavailable or not fully specified but the human "teacher" of the system can provide reinforcement signals of "good" vs "bad" to guide the neural net's weight adjustments, e.g. in machine translation.
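Supervised learning can be sketched with a single processing element trained by the classic perceptron rule. This is an illustrative stand-in, not the method used in NETtalk; the training set (the logical OR function), the learning rate and the function names are assumptions.

```python
def predict(weights, bias, inputs):
    """Output 1 if the weighted sum of the inputs exceeds the threshold, else 0."""
    net = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if net > 0 else 0


def train_perceptron(examples, epochs=20, rate=0.1):
    """Present each (input, desired output) pair repeatedly and correct the weights on errors."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in examples:
            error = target - predict(weights, bias, inputs)
            # Weights change only when the output differs from the desired output.
            weights = [w + rate * error * x for w, x in zip(weights, inputs)]
            bias += rate * error
    return weights, bias


# Hypothetical training set: input pairs with their desired outputs (logical OR).
training_set = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
weights, bias = train_perceptron(training_set)
for inputs, target in training_set:
    print(inputs, predict(weights, bias, inputs), target)
```

The "teacher" here is simply the desired output column of the training set; a reinforcement signal would replace the exact error with a coarser "good" or "bad" judgment.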

Artificial neural networks have three very important additional capabilities:

(a) generalization, i.e. being able to accommodate variations in input and still be able to produce the correct output. An object can be recognized even when the system has never seen the same exact object before or if only partial input or partly erroneous input is available;

(b) abstraction, i.e. being able to abstract the "ideal" from a non-ideal training set and form an internal representation of the training set's salient features. For instance, a neural network can be trained with less than perfect character shapes and can then produce the "ideal" letter A or B or C etc. It can also "separate the sheep from the goats", as in distinguishing documents on crisis management from those on management crisis, or management by crisis and crisis by management, unlike most Boolean keyword search systems;

(c) parallelism, i.e. all processing elements acting upon the information simultaneously, thus achieving very high execution speeds (Stanfill & Kahle 1986).
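Recognition from partly erroneous input, as described under (a), can be sketched with a small Hopfield-style associative memory. This particular model is not named in the text and is used here only as an assumed illustration; the two stored patterns, the cue and the function names are hypothetical.

```python
def train(patterns):
    """Build symmetric weights by summing the outer products of the stored patterns."""
    n = len(patterns[0])
    w = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    w[i][j] += p[i] * p[j]
    return w


def recall(w, cue, steps=5):
    """Update all nodes simultaneously until the state stops changing."""
    state = list(cue)
    for _ in range(steps):
        new_state = []
        for i, row in enumerate(w):
            net = sum(wij * sj for wij, sj in zip(row, state))
            # A node keeps its current value when its summed input is exactly zero.
            new_state.append(state[i] if net == 0 else (1 if net > 0 else -1))
        if new_state == state:
            break
        state = new_state
    return state


# Two hypothetical stored patterns, each node being +1 (active) or -1 (inactive).
pattern_1 = [1, 1, 1, 1, -1, -1, -1, -1]
pattern_2 = [1, 1, -1, -1, 1, 1, -1, -1]
w = train([pattern_1, pattern_2])

# The cue is pattern_1 with its first element corrupted.
cue = [-1, 1, 1, 1, -1, -1, -1, -1]
print(recall(w, cue) == pattern_1)
```

Because every node updates from the same previous state, the inner loop over nodes is the part that a parallel machine could execute simultaneously, as described under (c).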

Neural networks are self-processing in that no external program operates on the network. The network literally shapes and dynamically reorganizes itself, with "intelligent behavior" emerging from the local interactions that occur between the numerous components through their complex interconnections in response to external inputs.

As with all promising technologies, there is a temptation and danger to hype neural networks and anthropomorphize their capabilities of learning, generalization and abstraction beyond all reason. To put things in perspective: there are approximately 1,000 interconnections in a worm's brain, thousands to millions of interconnections in existing artificial neural networks and 100 billion in the human brain. Despite their relatively long history (over five decades of R & D work) and reversals of fortune and popularity, neural networks are still in their infancy. Nonetheless, very successful connectionist models and applications are increasingly available or being developed, e.g. visual and non-visual pattern matching, automated inspection of faulty products, oil drilling data evaluations, detection of explosives and check signatures, electronic eyes and ears and genetic fingerprinting for personnel identification, robotics sensor-controls, speech recognition and synthesis, error detection and correction in modems and communications networks, credit risk analysis, natural language processing and translation, associative database retrieval, special sensory aids for the deaf and the blind through translation of auditory and visual signals to transcutaneous stimulation, and many other applications.

3. Library Applications of Neural Networks

Today's computerized library applications lack many desirable features that very large scale neural networks could potentially support by virtue of their inherent abstraction, generalization and massive parallelism, such as the ability to:

• self organize and automatically restructure the database

• deal with incomplete or imprecise queries

• support natural language interfaces

• resolve semantic ambiguities and complexities in queries

• perform closest (partial) match non-Boolean searches

• provide ranked output of relevant items

• organize the database according to user preferences

• accept relevance and other feedback from the user

• learn document, term and query distribution patterns

• exploit implicit and explicit associations in the database

• cope with the combinatorial information explosion

• offer "intelligently synthesized" output (answers, summaries)

• discover and suggest new relationships and hypotheses.
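Two of the capabilities listed above, closest (partial) match searching and ranked output, can be sketched as a single step of activation spreading from query term nodes to document nodes. The tiny collection, the uniform link weights and the function name `rank_documents` are assumptions made for illustration; they do not describe any of the systems cited in this paper.

```python
# Hypothetical links between term nodes and document nodes, with link weights.
doc_terms = {
    "d1": {"neural": 1.0, "network": 1.0, "retrieval": 1.0},
    "d2": {"library": 1.0, "catalog": 1.0},
    "d3": {"retrieval": 1.0, "library": 1.0},
}


def rank_documents(query_terms, doc_terms):
    """Activate the query's term nodes and let each document sum the activation it receives."""
    scores = {}
    for doc, terms in doc_terms.items():
        score = sum(terms.get(term, 0.0) for term in query_terms)
        if score > 0:
            scores[doc] = score
    # Highest activation first; ties are broken by document identifier.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


# No document contains all three terms, so a Boolean AND search would return nothing.
print(rank_documents(["neural", "retrieval", "thesaurus"], doc_terms))
# → [('d1', 2.0), ('d3', 1.0)]
```

Relevance feedback could be layered on top by adjusting the link weights of documents the user marks as relevant, in the spirit of the learning rule described in Section 2.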

Research and development work in information retrieval, and specifically the use of conventional statistical, probabilistic and artificial intelligence methods and techniques, has demonstrated the feasibility of supporting many of the above capabilities on a limited scale in static information environments (Doszkocs 1983; Salton 1989). Recent R & D efforts in the area of dynamic neural network models for library and information retrieval applications have been reviewed by Doszkocs, Reggia, & Lin (1990). Of particular importance are the pioneering work of Mozer (1984), Belew (1989) and Kwok (1989) in exploring the use of neural networks for information retrieval, the exploratory research of Lin (1989), who developed a fuzzy model of document representation, and the efforts of Kimoto & Iwadera (1990), whose neural network thesaurus development continues a long line of ideas and research on associative vocabulary displays to aid searching, indexing and classification (Bush 1945; Doyle 1961; Stiles 1961; Doszkocs 1978).

The future potential of neural networks can be sensed by envisioning very large connectionist models that fully exploit the wealth of primary, secondary, tertiary and higher order associations in what would essentially serve as mankind's collective associative memory, as presaged by H.G. Wells' World Brain.

There is a rich web of direct and indirect associations in bibliographic databases among:

documents • index terms • classification codes • queries

and other units of information (e.g. authors, journal titles, chemical names and structures, disease manifestations, symptoms and treatment protocols, etc.). The importance and utility of some of these associations have long been recognized and exploited via conventional techniques. The Science Citation Index and the Social Science Citation Index are well-known examples of forward and backward linkages among citing and cited authors and references. Hypertext and hypermedia database links allow authors and searchers to establish and exploit explicit connections among pieces of information and items of interest. Neural networks of the future will enable us to fully explore and utilize the practically infinite conceptual weavings between

• Document * Document [content associations]

• Document * Index Term [concept associations]

• Document * Classification [automatic classification/cataloging]

• Document * Query [retrieval associations]

• Index Term * Index Term [automatic thesaurus construction]

• Index Term * Classification [automatic classification]

• Index Term * Query [retrieval impact, query expansion]

• Classification * Classification [automatic self organizing]

• Classification * Query [retrieval of like items and like topics]

• Query * Query [DB impact; query enhancement]
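Two of the pairings above, Index Term * Index Term and Document * Document, can be derived from nothing more than the index terms assigned to each document. The sketch below uses simple co-occurrence counts as association strengths; the sample records and function names are hypothetical, and a neural network model would learn and adjust such weights rather than merely count them.

```python
from collections import Counter
from itertools import combinations

# Hypothetical bibliographic records: document identifier -> assigned index terms.
doc_terms = {
    "d1": {"neural", "network", "retrieval"},
    "d2": {"neural", "network"},
    "d3": {"retrieval", "library"},
}


def term_associations(doc_terms):
    """Index Term * Index Term: count how often two terms index the same document."""
    counts = Counter()
    for terms in doc_terms.values():
        for pair in combinations(sorted(terms), 2):
            counts[pair] += 1
    return counts


def document_associations(doc_terms):
    """Document * Document: count the index terms that two documents share."""
    return {(a, b): len(doc_terms[a] & doc_terms[b])
            for a, b in combinations(sorted(doc_terms), 2)}


print(term_associations(doc_terms).most_common(1))
# → [(('network', 'neural'), 2)]
print(document_associations(doc_terms))
# → {('d1', 'd2'): 2, ('d1', 'd3'): 1, ('d2', 'd3'): 0}
```

Strong term pairs are candidates for thesaurus entries or query expansion, and strong document pairs are candidates for "more like this" retrieval.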

4. Conclusion

Undoubtedly, neural networks will be integrated with other information technologies and artificial intelligence approaches to offer powerful solutions to librarians and library users. Perhaps the most exciting promise of such hybrid neural networks is their potential to enable future systems to provide intelligent, fully synthesized answers to user queries, instead of just references and original source materials, and aid people in their discovery and creativity by detecting and suggesting new conceptual associations and hypotheses and promoting serendipitous insights.

References

Belew, R. K., "Adaptive information retrieval: Using a connectionist representation to retrieve and learn about documents," In ACM SIGIR Proceedings, 1989. pp. 11-20.

Bush, V., "As we may think," Atlantic Monthly, 176 (1): 101-108 (July 1945).

Doszkocs, T. E. AID, an Associative Interactive Dictionary for Online Bibliographic Searching (Doctoral Dissertation). College Park, MD: University of Maryland, 1979. 124 pp. (University Microfilms order: 79-25741).

Doszkocs, T. E., Reggia, J., & Lin, X., "Connectionist models and information retrieval," In Annual Review of Information Science and Technology (ARIST), 25: 209-260, 1990.

Doyle, L. B., "Semantic road maps for literature searchers," Journal of the Association for Computing Machinery, 8: 553-578 (1961).

Kimoto, H. & Iwadera, T., "Construction of a dynamic thesaurus and its use for associated information retrieval," In ACM SIGIR Proceedings, 1990. pp. 227-240.

Kwok, K. L., "A neural network for probabilistic information retrieval," In ACM SIGIR Proceedings, 1989. pp. 21-30.

Lin, X., "A fuzzy model of document representation based on neural nets," Paper presented in ASIS ’89 Doctoral Research Forum. Available from the author, College of Library and Information Services, University of Maryland, College Park, MD 20742.

Mozer, M. C. Inductive Information Retrieval Using Parallel Distributed Computation. Research Report. San Diego, CA: University of California at San Diego, June 1984.

Salton, G. Automatic Text Processing: The Transformation, Analysis, and Retrieval of Information by Computer. Reading, MA: Addison-Wesley, 1989. 530 pp.

Sejnowski, T. J. & Rosenberg, C. R., "Parallel networks that learn to pronounce English text," Complex Systems, 1 (1): 145-168 (1987).

Stanfill, C. & Kahle, B., "Parallel free-text search on the connection machine system," Communications of the ACM, 29: 1229-1239 (1986).

Stiles, H. E., "The association factor in information retrieval," Journal of the Association for Computing Machinery, 8 (2): 271-279 (1961).

Wasserman, P. D. Neural Computing: Theory and Practice. New York, NY: Van Nostrand Reinhold, 1989. 230 pp.